Retailers, join our live Facewatch demonstration and learn from leading retailers how Facewatch has worked for them.

Places are limited. Please click here to register.

The Grocer reports supermarket bosses saying that theft levels are ‘off the charts’ and that the situation is only going to get worse. According to the Office for National Statistics, 89% of adults in Great Britain reported an increase in their cost of living in August 2022, while household incomes are expected to fall in 2022.

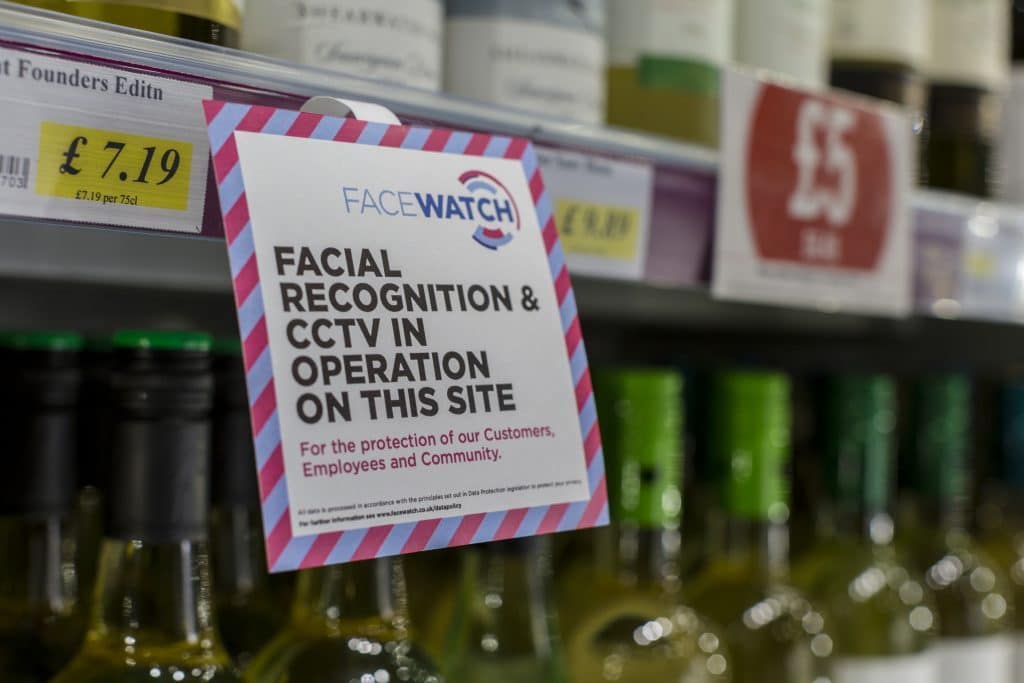

The cost of living crisis is getting worse and it’s predicted that crime will continue to increase. As a retailer, you have a right to protect your business, employees and customers using the technologies available to you, provided they are lawful.

Facewatch is fully UK GDPR compliant and proven to reduce crime by discouraging subjects of interest from entering your premises. You can expect substantial reductions in financial loss, typically 50% after 6 months, and your frontline workers will tell you they feel safer where Facewatch is deployed.

Facewatch know, through interviews with their clients, that traditional security such as CCTV, tagging and human guarding is ineffective and doesn’t deter crime. It only records crime as it happens and can create unnecessary conflict in store when staff try to recover stolen items. There’s no evidence that these traditional methods reduce crime over time, and that’s why more retailers are turning to Facewatch.

Facewatch would like to invite UK retailers to join our live webinar demonstration.

You can see how easily databases are created, how subjects of interest (SOIs) are detected and how the data is lawfully shared. We’ll be joined by two retailers who have deployed Facewatch across their large estates over the last 3 years. They will share how Facewatch has worked for them, the ROI they’ve delivered and information from the reporting dashboard that they now use to make operational decisions, including footfall monitoring and SOI trend patterns.

Attendees will be invited to submit questions during the webinar and we will attempt to answer as many as possible during the session.

Please click here to register your interest.